DeepSeek is an excellent AI tool. However, for various reasons, many AI enthusiasts want to run it locally on their own devices. This guide shows you how to download DeepSeek on different devices for a better and more private AI conversation experience.

Is DeepSeek Safe to Download?

Is DeepSeek safe? Its privacy policy leaves some details of data management unclear. For example, it states that user data is stored on secure servers in China, but it does not specify how long this data will be retained or whether it can be permanently deleted.

That said, judging by precedents such as TikTok, Xiaohongshu, and Lemon8, it is highly unlikely that DeepSeek user data will run into major issues. However, if you post inappropriate content on DeepSeek, your data could still be handed over to the authorities.

Therefore, if you are dissatisfied with DeepSeek’s data management, local deployment on your computer would be a good alternative.

Tip:

Another option for protecting your privacy is using a VPN, e.g., LightningX VPN. A VPN hides your IP address and encrypts the connection between your device and AI tools like ChatGPT, Gemini, Claude, and DeepSeek, although it does not change what the service itself stores about your conversations. LightningX VPN supports Android, iOS, Windows, macOS, Linux, Apple TV, and Android TV, and lets you switch your location among 2,000 servers in 50+ countries. It is user-friendly, fast, and reliable, and it currently offers a free trial for beginners.

System Requirements for Downloading DeepSeek Models

Here are the basic requirements for running DeepSeek locally on a computer or a mobile device.

Android:

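- Processor: Qualcomm Snapdragon 845, Snapdragon 8 Gen 2/3, or equivalent.

- RAM: At least 6GB; 8GB, 12GB, or more for larger models.

- Storage: At least 8GB of free space; 12GB or more for larger models.

Examples of Snapdragon 845 phones: OnePlus 6, Samsung Galaxy S9, Xiaomi Pocophone F1, Google Pixel 3, Sony Xperia XZ2. Some of these shipped with only 4GB of RAM, so check the RAM requirement as well.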

iOS:

- RAM: At least 8GB; 16GB or more for larger models.

- For smaller models: iPhone 15 Pro and iPad Air with 8GB of RAM.

- For larger models: iPad Pro with 16GB of RAM.

Windows:

- Operating system: Windows 10 or later.

- RAM: At least 8GB of RAM (for smaller models like 1.5B). For larger models, 16GB or more is recommended.

- Storage: SSD with at least 50GB of free space.

- GPU: Optional but recommended. An NVIDIA GPU with CUDA support (e.g., RTX 2060 or higher) gives better performance.

macOS:

- Operating system: macOS 11 Big Sur or later.

- Processor: Multi-core CPU (Apple Silicon M1/M2 or Intel Core i5/i7/i9 recommended).

- RAM: At least 8GB (16GB recommended for larger models).

- Storage: Minimum 10GB of free space (50GB or more recommended for larger models).
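If you are on macOS or Linux (or WSL on Windows), a small shell script can compare your machine against the RAM and storage figures above before you download anything. This is a minimal sketch: the 8GB and 50GB thresholds are taken from the Windows list and are assumptions you should adjust for the model size you choose, and the function names are illustrative.

```shell
#!/usr/bin/env bash
# Pre-flight check for running a DeepSeek model locally (macOS/Linux/WSL).
# Assumption: thresholds come from the Windows requirements in this article.
MIN_RAM_GB=8
MIN_DISK_GB=50

# Total physical RAM, rounded to whole GB.
total_ram_gb() {
  if [ -r /proc/meminfo ]; then
    # Linux/WSL: MemTotal is reported in kB.
    awk '/^MemTotal:/ {printf "%.0f\n", $2 / 1024 / 1024}' /proc/meminfo
  else
    # macOS: hw.memsize is reported in bytes.
    sysctl -n hw.memsize | awk '{printf "%.0f\n", $1 / 1024 / 1024 / 1024}'
  fi
}

# Free space in whole GB (rounded down) on the filesystem holding directory $1.
free_disk_gb() {
  df -Pk "${1:-$HOME}" | awk 'NR == 2 {printf "%d\n", $4 / 1024 / 1024}'
}

# meets_min VALUE MINIMUM: succeeds when VALUE >= MINIMUM (integers).
meets_min() {
  [ "$1" -ge "$2" ]
}

# Print a verdict for RAM and disk; return 1 if either is below the minimum.
check_requirements() {
  local ram disk status=0
  ram=$(total_ram_gb)
  disk=$(free_disk_gb "$HOME")
  if meets_min "$ram" "$MIN_RAM_GB"; then
    echo "RAM: ${ram}GB (ok)"
  else
    echo "RAM: ${ram}GB (below ${MIN_RAM_GB}GB)"
    status=1
  fi
  if meets_min "$disk" "$MIN_DISK_GB"; then
    echo "Free disk: ${disk}GB (ok)"
  else
    echo "Free disk: ${disk}GB (below ${MIN_DISK_GB}GB)"
    status=1
  fi
  return "$status"
}
```

Save it as, say, check.sh, then run source check.sh followed by check_requirements. It prints one line per check and returns a non-zero status if either check fails. RAM is rounded rather than truncated because the operating system reports slightly less than the installed amount.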

Download DeepSeek on Android and iOS

To have DeepSeek on your mobile device, you can download the app directly from the Google Play Store or App Store, or download DeepSeek model files to run it offline.

Go to the Google Play Store or App Store

Here are the steps to get the DeepSeek app:

- Search for DeepSeek in the Google Play Store or App Store on your mobile device.

- Download and install it on the device.

- Done. You can then sign up for a DeepSeek account, turn on the R1 model (the DeepThink (R1) button), and start chatting with DeepSeek.

Run DeepSeek Offline on Mobile Devices

Running DeepSeek locally on a mobile device requires an app that can load model files, such as PocketPal AI (Android and iOS), or a terminal environment such as Termux (Android). Termius (iOS) is an SSH client, so it can only connect to a model running on another machine. For beginners, PocketPal AI is the easiest option. Here’s how to use it.

- Install PocketPal AI from the Google Play Store or App Store.

- Open the app and tap “Go to Models” at the bottom right of the screen.

- Tap the “+” icon at the bottom right and then “Add from Hugging Face”. Then, you’ll see all AI models from the Hugging Face library.

- Search for “DeepSeek” in the search bar at the bottom, and you’ll see all the DeepSeek AI models.

- Download the model that suits your device. A 7B model is a moderate choice; smaller models such as 1.5B need less RAM. Also, check the device requirements listed above.

- After downloading the file, return to the “Models” page to check it.

- Tap “Settings” under the downloaded file and set the maximum number of generated tokens (the N PREDICT field) to 4096, so that DeepSeek has enough room to finish longer reasoning and answers. Then, tap “Save Changes”.

- Tap “Load” next to “Settings”.

- Done. Now you can interact with the local DeepSeek model through PocketPal AI’s graphical interface.
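To judge which model suits your device, a rough rule of thumb is that the weights take about (parameters × bits per weight ÷ 8) bytes. For a 7B model quantized to 4 bits, that is 7 × 4 ÷ 8 = 3.5GB, compared with 7GB at 8 bits. The shell function below does this arithmetic. It is a back-of-the-envelope sketch (the names estimate_gb and compare_sizes are hypothetical) that ignores file overhead and the extra memory needed for the conversation context; quantization formats such as Q4_K_M also average slightly more than 4 bits per weight, so real files are somewhat larger.

```shell
#!/usr/bin/env bash
# estimate_gb PARAMS_IN_BILLIONS BITS_PER_WEIGHT
# Rough size of the model weights in GB: parameters x bits / 8.
# Assumption: ignores metadata and runtime memory (context/KV cache),
# so treat the result as a lower bound.
estimate_gb() {
  awk -v p="$1" -v b="$2" 'BEGIN { printf "%.1f\n", p * b / 8 }'
}

# Compare a few common model sizes at 4-bit and 8-bit quantization.
compare_sizes() {
  local size
  for size in 1.5 7 14; do
    echo "${size}B: $(estimate_gb "$size" 4)GB at 4-bit, $(estimate_gb "$size" 8)GB at 8-bit"
  done
}
```

For example, estimate_gb 7 4 prints 3.5 and estimate_gb 14 4 prints 7.0, which helps explain why a 4-bit 7B model is a moderate choice for a phone with 8GB of RAM, while a 14B model would leave little memory for anything else.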

Using Ollama to Download DeepSeek on Windows/Mac/Linux

Ollama is one of the most beginner-friendly tools for running LLMs locally on a computer, and setting up DeepSeek with it is almost the same on Windows, macOS, and Linux. The setup includes two main steps, plus an optional third step if you want a web interface.

Step 1. Download the Ollama Desktop

Go to Ollama.com and click on the Download button in the upper right corner. Choose your operating system. For Windows, you can install Ollama directly. However, on macOS, since the downloaded file is in .dmg format, you need to drag the Ollama icon to the Applications folder to complete the installation.

For a Linux environment, follow these steps:

Copy and paste the following commands into your terminal one by one. The first command installs Ollama, the second starts the Ollama service, and the third verifies the installation by displaying the installed version. Note that ollama serve keeps running in the foreground, so run the third command in a new terminal window. If the installer has already started Ollama as a background service, ollama serve may report that the address is already in use, and you can skip it.

- curl -fsSL https://ollama.com/install.sh | sh

- ollama serve

- ollama --version

If Ollama is installed successfully, the version number should appear.
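On Linux, the three commands above can be wrapped in small functions so that the server is started only when it is not already running. This is a sketch under two assumptions: that Ollama listens on its default address, 127.0.0.1:11434, and that the official install script may already have started it as a background service. The function names are illustrative.

```shell
#!/usr/bin/env bash
# Illustrative wrappers around the Linux install steps.

# Step 1: run the official install script.
install_ollama() {
  curl -fsSL https://ollama.com/install.sh | sh
}

# Step 2: start the server in the background unless something already
# answers on Ollama's default port (assumed: 127.0.0.1:11434).
ensure_ollama_running() {
  if curl -fs http://127.0.0.1:11434/ >/dev/null 2>&1; then
    echo "Ollama is already running"
  else
    nohup ollama serve >"$HOME/ollama-serve.log" 2>&1 &
    echo "Started ollama serve (log: $HOME/ollama-serve.log)"
  fi
}

# Step 3: print the installed version, or fail if ollama is not on PATH.
verify_ollama() {
  if ! command -v ollama >/dev/null 2>&1; then
    echo "ollama not found on PATH" >&2
    return 1
  fi
  ollama --version
}
```

After source ollama-setup.sh (any file name works), run install_ollama, ensure_ollama_running, and verify_ollama in that order. Starting the server in the background avoids the need for a second terminal window.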

Note: Be cautious when entering code into the Command Prompt, as improper commands may result in data loss.

Step 2. Download DeepSeek through the Command Prompt

1. Launch Command Prompt or Terminal on your computer.

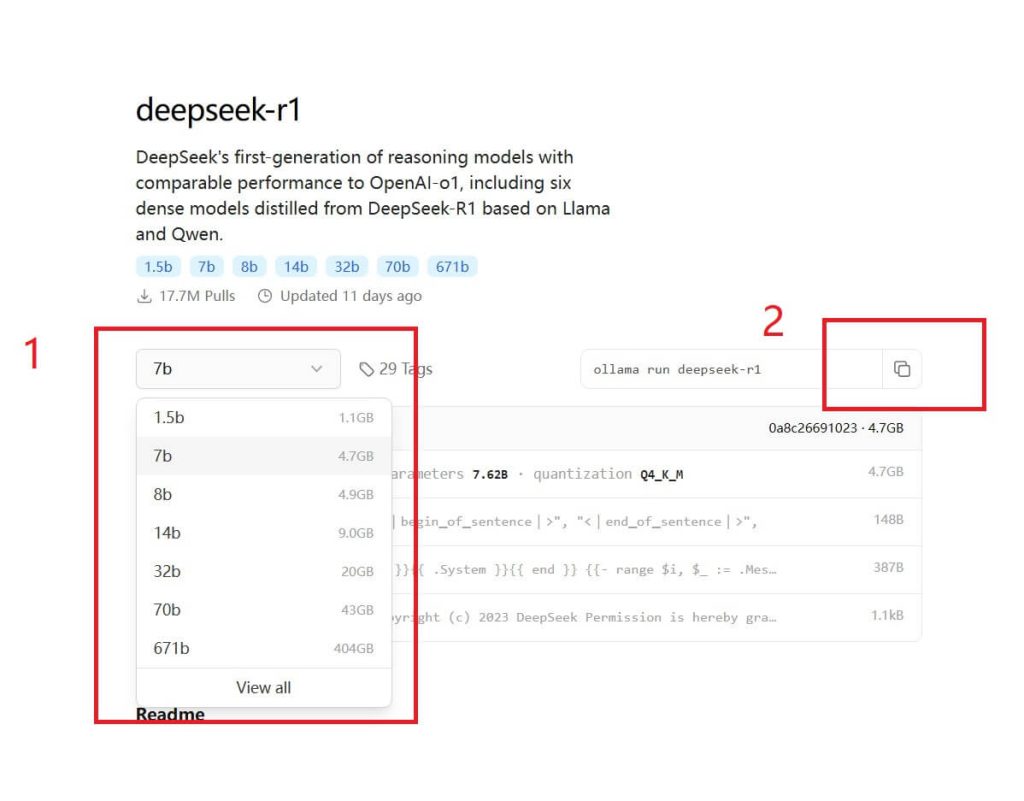

2. Search for the DeepSeek model you want on the Ollama website and copy its run command (for example, ollama run deepseek-r1:7b). Ollama’s library includes DeepSeek R1, Coder, V2.5, V3, and more. Hardware requirements for different model sizes are listed in the System Requirements section above.

3. Paste the command into Command Prompt or Terminal and press Enter. Then, wait for the DeepSeek model to download.

4. Done. Now you can type prompts to interact with the DeepSeek AI model.

5. To quit the conversation, type /bye or press the Ctrl + D keys.
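The same workflow can be scripted, for example to check whether a model is already downloaded or to ask a single question without opening an interactive chat (ollama run accepts the prompt as an extra argument). The sketch below assumes the deepseek-r1:7b tag, which you can override through the MODEL variable; the helper names are hypothetical, while pull, run, and list are real ollama subcommands.

```shell
#!/usr/bin/env bash
# Model tag to use; override with e.g. MODEL=deepseek-r1:1.5b.
MODEL="${MODEL:-deepseek-r1:7b}"

# Download the model without opening a chat.
pull_model() {
  ollama pull "$MODEL"
}

# Ask a single question non-interactively and print the answer.
ask_once() {
  ollama run "$MODEL" "$1"
}

# Succeed if the model already appears in `ollama list`
# (first column after the header row holds the model name).
is_installed() {
  ollama list | awk 'NR > 1 {print $1}' | grep -qxF "$MODEL"
}
```

For example, is_installed || pull_model downloads the model only when it is missing, and ask_once "Explain recursion in one sentence" prints a single answer and exits.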

Step 3 (Optional). Chat with DeepSeek in a Web UI

If you want to chat with your local DeepSeek model in a user-friendly interface, install Open WebUI, which works with Ollama.

1. Install Python and pip on your computer. Open WebUI’s documentation recommends Python 3.11.

2. Open Command Prompt or Terminal and paste the below command to install Open WebUI:

pip install open-webui

3. Make sure the model is downloaded by running it once through Ollama. Taking DeepSeek R1 as an example (type /bye to leave the chat before the next step):

ollama run deepseek-r1:1.5b

4. Start Open WebUI:

open-webui serve

5. Go to http://localhost:8080 on your browser.

6. Click “Get started” and enter a username.

7. Done. Now you can chat with the DeepSeek model on the web interface.

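8. When you are finished, press Ctrl + C in the terminal running Open WebUI. To stop Ollama as well, open the Ollama icon in the system tray (Windows) or menu bar (macOS) and choose “Quit Ollama”.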
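The Open WebUI steps can also be collected into one function that installs the package into its own virtual environment, which keeps it separate from other Python packages. This is a sketch under stated assumptions: Open WebUI’s documentation recommends Python 3.11 at the time of writing, so the check below only enforces 3.11 as a minimum (very new Python versions may also be unsupported), and the virtual-environment path and function names are hypothetical.

```shell
#!/usr/bin/env bash
# python_at_least VERSION MAJOR MINOR
# Succeeds if VERSION (e.g. "3.11.9") is at least MAJOR.MINOR.
python_at_least() {
  local major minor
  IFS=. read -r major minor _ <<< "$1"
  [ "$major" -gt "$2" ] || { [ "$major" -eq "$2" ] && [ "$minor" -ge "$3" ]; }
}

# Install Open WebUI into a dedicated virtual environment and start it.
# Assumption: hypothetical venv location $HOME/open-webui-venv (macOS/Linux layout).
setup_open_webui() {
  local ver venv="$HOME/open-webui-venv"
  ver=$(python3 -c 'import platform; print(platform.python_version())')
  if ! python_at_least "$ver" 3 11; then
    echo "Python $ver found; Open WebUI expects 3.11" >&2
    return 1
  fi
  python3 -m venv "$venv"
  "$venv/bin/pip" install open-webui
  # Serves the web interface on http://localhost:8080 by default.
  "$venv/bin/open-webui" serve
}
```

After sourcing the file, run setup_open_webui and open http://localhost:8080 once the server reports that it is ready. Press Ctrl + C in that terminal to stop it.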

Using LM Studio to Download DeepSeek R1 Distill on Your Computer

LM Studio is another tool for downloading and running DeepSeek models such as DeepSeek R1 Distill, DeepSeek Math, and DeepSeek Coder. You can browse its model catalog to see which models are available.

- Visit LMStudio.ai and download its software (0.3.9 or later) on your Mac, Windows, or Linux computer.

- Launch the LM Studio program and click on the search icon in the left panel.

- Under Model Search, select the DeepSeek R1 Distill (Qwen 7B) model and click the Download button. The model file is about 4.68GB, so your PC should have at least 5GB of free storage and 8GB of RAM.

- After downloading the model, go to the Chat window and select the model to load.

- Click the Load Model button. If loading fails, set GPU offload to 0 so that the model runs entirely on the CPU; this is slower but avoids GPU memory errors.

- Done. Now you can use an offline version of DeepSeek on your computer.

How to Uninstall DeepSeek from Ollama and LM Studio

Check the guide below to remove local DeepSeek models from your computer.

Remove DeepSeek from Ollama

Step 1. Open Command Prompt or Terminal on your computer.

Step 2. Unload the model if it is currently running (replace the name with your model’s name):

ollama stop deepseek-r1:1.5b

Step 3. Verify which DeepSeek models you installed:

ollama list

Step 4. Remove the installed DeepSeek model, using the full name shown by ollama list. Taking DeepSeek R1 as an example:

ollama rm deepseek-r1:1.5b

Step 5. Done. If you can’t delete the model, check the installed model’s name again.

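Step 6. The ollama rm command normally deletes the model files as well, but you can check for residual files in Ollama’s default model folder:

- Linux: /usr/share/ollama/.ollama/models/ (if Ollama runs as a system service) or ~/.ollama/models/

- macOS: ~/.ollama/models/

- Windows: C:\Users\<YourUsername>\.ollama\models\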
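If you have downloaded several DeepSeek variants, a short loop can unload and remove all of them at once. This sketch uses the real ollama stop and ollama rm subcommands and assumes that the first column of ollama list holds the model name, as it does in current releases; the function name is hypothetical. Review the output of ollama list first, because every model whose name starts with deepseek will be deleted.

```shell
#!/usr/bin/env bash
# Unload and delete every local Ollama model whose name starts with "deepseek".
remove_deepseek_models() {
  local model
  # Skip the header row and keep only names such as deepseek-r1:7b.
  ollama list | awk 'NR > 1 && $1 ~ /^deepseek/ {print $1}' |
    while read -r model; do
      echo "Removing $model"
      ollama stop "$model" 2>/dev/null || true  # ignore "not running" errors
      ollama rm "$model"
    done
}
```

Other models, such as a Llama model you pulled separately, are left untouched because the awk filter only matches names beginning with deepseek.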

Remove DeepSeek from LM Studio

Step 1. Open LM Studio.

Step 2. Navigate to the My Models tab on the left panel.

Step 3. Find the DeepSeek model you installed.

Step 4. Click on the three dots next to the model’s name.

Step 5. Select Delete and confirm the action by clicking Delete again.

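Step 6. Check that the DeepSeek files are deleted. In recent versions of LM Studio, the default models folder is:

- Windows: C:\Users\<YourUsername>\.lmstudio\models\

- macOS: /Users/<YourUsername>/.lmstudio/models/

- Linux: /home/<YourUsername>/.lmstudio/models/

Older versions used ~/.cache/lm-studio/models/ instead. If you changed the models directory, check the path shown in the My Models tab.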

Step 7. Done. Now the DeepSeek local files are completely removed from your computer.
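To double-check that nothing is left behind, you can search the models folder for anything with deepseek in its name. This sketch assumes the default folder of recent LM Studio versions, ~/.lmstudio/models, and accepts a different path as its first argument; the function name is hypothetical. It only lists matches and does not delete anything.

```shell
#!/usr/bin/env bash
# find_deepseek_leftovers [MODELS_DIR]
# Lists files or folders whose names contain "deepseek" (case-insensitive).
# Assumption: default LM Studio models folder is ~/.lmstudio/models.
find_deepseek_leftovers() {
  local dir="${1:-$HOME/.lmstudio/models}"
  if [ ! -d "$dir" ]; then
    echo "No models directory at $dir" >&2
    return 1
  fi
  find "$dir" -iname '*deepseek*'
}
```

An empty result means the DeepSeek files are gone; anything it prints can be deleted manually after you confirm it is no longer needed.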

Benefits and Drawbacks of Downloading DeepSeek Locally

Advantages:

Privacy and security: Your prompts and the model’s responses stay on your device, so DeepSeek and other third parties can’t access your sensitive information.

Offline access: Once DeepSeek is set up locally, it doesn’t need an internet connection, so you don’t have to worry about the “DeepSeek server busy” issue.

Customization: You can fine-tune or modify the model’s behavior, prompts, and outputs to better suit your specific needs or domain.

No rate limits: You won’t be constrained by API rate limits or usage quotas, allowing for unlimited queries and experimentation.

Cost efficiency: Once downloaded, there are no ongoing costs for API calls or cloud-based inference, which can be expensive for high usage.

Disadvantages:

High hardware requirements: Running DeepSeek locally requires significant computational resources. On a low-end device, responses can be very slow, and larger models may fail to load at all.

Maintenance: You need to keep the model and its dependencies up to date, which can be time-consuming. You will also have to troubleshoot bugs and other problems yourself.

Energy consumption: Running large models locally can consume a lot of power, especially on a GPU, which may increase electricity costs.

Conclusion

Overall, downloading DeepSeek takes just a few clear steps. More and more AI enthusiasts are exploring how to run AI models locally instead of relying on cloud services such as ChatGPT, Gemini, and Claude. Some of them have little to no computer knowledge, yet they have learned a lot through the process. If you are also a beginner, this article can help you set up your own DeepSeek AI companion.